The Value of A/B Testing, Split Testing and Multivariate Testing

We all know them: A/B tests - those friendly helpers when it comes to comparing multiple versions of an item.

Mautic offers extensive A/B testing for emails: it lets you compare open rates and click-through rates, as well as the rates of asset downloads and form submissions.

However, there is another channel we love and would love to have A/B testing options for: Mautic's Focus Items.

The questions there are a bit more complex than for emails. Basically, it is all about "Which of my Focus Item variants (in terms of content, visual design, behaviour) performs best?", but the problem is that "performs best" can be judged by a vast variety of criteria, such as:

- Click-through rate (for type "Link") / submission rate (for type "Form")

- Conversion rate (for whatever conversion goals may be defined)

- Quantitative behaviour after the Focus Item (subsequent dwell time / number of subsequent page hits)

- Qualitative behaviour after the Focus Item ("What do people do next, after the Focus Item has been displayed?")

- Conversion quality (like demographics, follow-up conversion rates, average purchase volume / lifetime value / opportunity size, ...)

Moreover, for some of these criteria we should not just compare one Focus Item to another, but also to the "no Focus Item at all" option, which is quite an important aspect! Plus, we need to make sure that we compare apples to apples. Example: for a Focus Item that is displayed after 30 seconds, the results must only be compared to the no-Focus-Item behaviour of those visitors who remain on the page in question for 30 seconds or longer.
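Once both groups are restricted to comparable visitors, comparing a rate such as the conversion rate of "Focus Item" vs. "no Focus Item" is a classic two-proportion problem. The Python sketch below uses a pooled two-proportion z-test. This is our choice of test for illustration, not necessarily the statistics hard-coded for the project; the function name and the counts in the usage lines are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare two conversion rates with a pooled two-proportion z-test.

    conv_x: number of converting visitors in group x
    n_x:    number of (comparable) visitors in group x
    Returns (z, two_sided_p_value); a positive z means group A converts better.
    """
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups need at least one visitor")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis "both groups convert equally".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No conversions at all (or everyone converted): nothing to distinguish.
        return 0.0, 1.0
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution:
    # 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: Group A (Focus Item) vs. Group B (no Focus Item).
    z, p = two_proportion_z_test(conv_a=48, n_a=400, conv_b=30, n_b=400)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference between the groups is unlikely to be pure chance; it says nothing about conversion quality, which needs its own analysis.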

Tricky, to say the least. And not in Mautic's current toolbox. But very interesting nonetheless, so we decided to set up an A/B analysis "the dirty way", i.e. with some tricks plus some hard-coded statistics for the specific case. The goal was not only to answer the questions of the project at hand, but also to gain experience, understand the pitfalls, and start shaping a generic solution within Mautic.

Implementation: Hacking an A/B Test for Mautic Focus Items

These are the details for the most complex case, the "Focus Item vs. no Focus Item" comparison:

a) How to send a given percentage of visitors to each behaviour

Since Mautic has no actual randomiser, this is how we hacked the challenge:

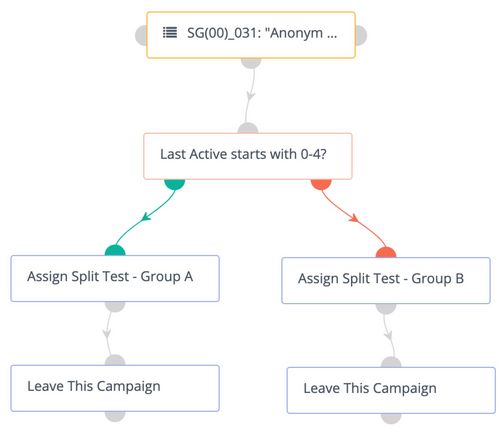

- Create a Segment for each split-test group

- Create a campaign for placing each contact in one of our split-test Segments, according to the desired distribution

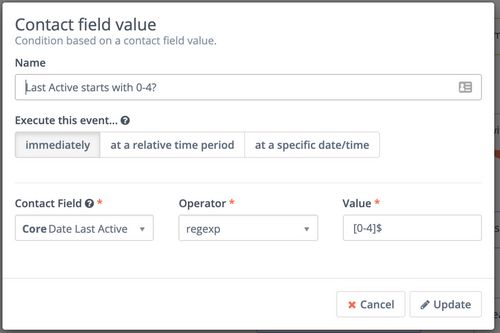

- Inside that campaign, create a "contact field value" condition that looks at the last digit of the contact's "Date Last Active", which is effectively evenly distributed. The regex "[0-4]$" thus selects a pseudo-random 50% of contacts, and other splits are just as easy (e.g. "[0-2]$" for 30%).

- Deliver Focus Items through campaigns that take the contact's split-test Segment into account

For our example, we need: "Group A" = Focus Item, "Group B" = no Focus Item.
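The "last digit of Date Last Active" trick can be checked outside Mautic before relying on it. The Python sketch below mimics the campaign condition and applies it to one timestamp per second over ten minutes. It assumes the field is compared as a "YYYY-MM-DD HH:MM:SS" string; `assign_group`, `simulate_split` and the 30% pattern are hypothetical helpers for illustration, not Mautic code.

```python
import re
from collections import Counter
from datetime import datetime, timedelta

# Condition used in the campaign: last digit 0-4 -> Group A (about 50 %).
# Assumption: "Date Last Active" is matched as a "YYYY-MM-DD HH:MM:SS" string,
# so the last digit is the units digit of the seconds.
GROUP_A_50 = re.compile(r"[0-4]$")
GROUP_A_30 = re.compile(r"[0-2]$")  # hypothetical alternative: a 30/70 split


def assign_group(date_last_active: str, pattern: re.Pattern = GROUP_A_50) -> str:
    """Mimic the campaign condition: a regex match puts the contact in Group A."""
    return "Group A" if pattern.search(date_last_active) else "Group B"


def simulate_split(pattern: re.Pattern, seconds: int = 600) -> Counter:
    """Apply the condition to one timestamp per second and count the groups."""
    start = datetime(2021, 1, 1, 12, 0, 0)
    stamps = (
        (start + timedelta(seconds=i)).strftime("%Y-%m-%d %H:%M:%S")
        for i in range(seconds)
    )
    return Counter(assign_group(stamp, pattern) for stamp in stamps)


if __name__ == "__main__":
    print(simulate_split(GROUP_A_50))  # 300 in Group A, 300 in Group B
    print(simulate_split(GROUP_A_30))  # 180 in Group A, 420 in Group B
```

Real activity timestamps are not spread evenly over the clock, but the units digit of the seconds is close to uniform, which is what makes this cheap split usable.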

b) Work the database

The other part of the job is to retrieve all the necessary data directly from the database and transform it into the desired information:

- For each Focus Item flavour (in our case: contacts in "Group A" who have been shown the Focus Item in question)

- But also for those contacts in "Group B" who would have seen the Focus Item had they been in a different split group. What does this mean? Example: if the Focus Item in question is set to "display after 30 seconds", we need to find Group B's visits to the same page on which the visitor stayed for at least 30 seconds.

And all this for a timeframe that needs to be defined, of course.
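The Group B filter is the trickiest part of the data work. The Python sketch below shows the logic on in-memory records rather than SQL: keep only visits by Group B contacts, to the page that carries the Focus Item, inside the chosen time frame, lasting at least the Focus Item's display delay. The `PageHit` record shape (contact, URL, hit time, leave time) is a hypothetical stand-in for whatever the database query returns, and `comparable_hits` is an illustrative name.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Set


@dataclass
class PageHit:
    # Hypothetical record shape for one page visit.
    contact_id: int
    url: str
    date_hit: datetime
    date_left: Optional[datetime]  # None if the exit time was not recorded


def comparable_hits(
    hits: Iterable[PageHit],
    group_b_contacts: Set[int],
    page_url: str,
    display_delay: timedelta,
    start: datetime,
    end: datetime,
) -> List[PageHit]:
    """Return Group B visits that *would* have shown a delayed Focus Item.

    A visit qualifies if it belongs to a Group B contact, is on the page
    carrying the Focus Item, starts inside [start, end), and lasted at
    least as long as the Focus Item's display delay.
    """
    result = []
    for hit in hits:
        if hit.contact_id not in group_b_contacts or hit.url != page_url:
            continue
        if not (start <= hit.date_hit < end):
            continue
        if hit.date_left is None:
            continue  # dwell time unknown: exclude rather than guess
        if hit.date_left - hit.date_hit >= display_delay:
            result.append(hit)
    return result
```

Excluding visits without a recorded exit time is a deliberate, conservative choice: counting them would mix in visitors who may have left before the Focus Item could ever appear.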

For these stats, we created a little PHP script that runs completely outside of Mautic and returns CSVs that a human can work with. Good enough for now!
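Our script is written in PHP; purely to illustrate the last step, here is a Python sketch that turns per-group counts into CSV text a human can open in a spreadsheet. `groups_to_csv` and the column names are hypothetical, and the counts in the usage line are invented.

```python
import csv
import io
from typing import Dict, Tuple


def groups_to_csv(counts: Dict[str, Tuple[int, int]]) -> str:
    """Render per-group (visitors, conversions) counts as CSV text.

    `counts` maps a group label to (visitors, conversions); the rate column
    is computed here so groups can be compared and sorted in a spreadsheet.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["group", "visitors", "conversions", "conversion_rate"])
    for group, (visitors, conversions) in sorted(counts.items()):
        rate = conversions / visitors if visitors else 0.0
        writer.writerow([group, visitors, conversions, f"{rate:.4f}"])
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    print(groups_to_csv({"Group A": (400, 48), "Group B": (400, 30)}))
```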

What are the Learnings?

As is frequently the case with Mautic's on-page elements, the delay between a user's behaviour and the resulting campaign action can be a bit of a drag. More frequent cron jobs can improve the situation (where possible), but an optimised campaign design will help, too.

When it comes to "What should Mautic-internal A/B testing look like?", it makes sense to keep a longer-term vision in mind (perhaps inspired by specialised tools like "Google Optimize"), but to start much smaller, with just a simple comparison and a rather narrow definition of "performs best", i.e. of the hypothesis.

Another nice thing would be a "void" Focus Item that makes a with/without comparison easier, even for "triggered" Focus Items (exit intent, scrolling, ...).

And of course it should generally be in line with the look & feel of Email A/B testing.

Obviously, the current "dirty" implementation is hard work and absolutely not for everyone. However, it lets us run actual real-life tests (rather than flying blind), which already makes it tremendously helpful for our customers. Plus, we are building the experience needed for a future implementation inside Mautic.